NVIDIA Unveils Next-Gen AI Supercomputer Chips

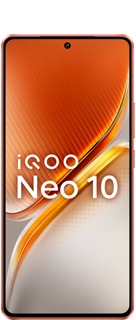

Hello iQOO fans,

NVIDIA has introduced its next generation of AI supercomputer chips, aimed at speeding up deep learning workloads, including large language models (LLMs) such as OpenAI's GPT-4.

Hopper Architecture Powers New Advancements

These cutting-edge chips, built on NVIDIA's "Hopper" architecture, are designed to deliver substantial performance gains for AI applications.

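H200 GPU: A Game-Changer for Large Language Models

At the heart of this innovation is the H200 GPU, offered on NVIDIA's HGX H200 server platform and the company's first chip to incorporate HBM3e memory. According to NVIDIA, it provides 141 GB of memory at 4.8 TB/s of bandwidth. That extra speed and capacity matter for large language models, whose weights have to fit in GPU memory and be read from it for every generated token.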
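To make the memory point concrete, here is a rough back-of-envelope sketch in Python. It is an illustration and not NVIDIA's methodology. It assumes 16-bit weights (2 bytes per parameter), ignores the KV cache and activations, and treats single-stream text generation as purely memory-bandwidth bound, so the result is only an upper bound. The function names are hypothetical, and the hardware figures used are NVIDIA's published specs for the H200 (141 GB, 4.8 TB/s) and the H100 SXM (80 GB, 3.35 TB/s).

```python
def weight_bytes(params_billions: float, bytes_per_param: int = 2) -> float:
    """Size of the model weights in bytes (assumes 2 bytes/param, i.e. FP16/BF16)."""
    return params_billions * 1e9 * bytes_per_param


def fits_in_memory(params_billions: float, capacity_gb: float,
                   bytes_per_param: int = 2) -> bool:
    """True if the weights alone fit in GPU memory (KV cache and activations ignored)."""
    return weight_bytes(params_billions, bytes_per_param) <= capacity_gb * 1e9


def max_decode_tokens_per_sec(params_billions: float, bandwidth_tb_s: float,
                              bytes_per_param: int = 2) -> float:
    """Upper bound on tokens/s for one stream, assuming every token reads all weights once."""
    return bandwidth_tb_s * 1e12 / weight_bytes(params_billions, bytes_per_param)


if __name__ == "__main__":
    # (capacity in GB, bandwidth in TB/s), taken from NVIDIA's published specs
    gpus = {"H100 SXM": (80, 3.35), "H200": (141, 4.8)}
    for name, (capacity_gb, bandwidth_tb_s) in gpus.items():
        fits = fits_in_memory(70, capacity_gb)
        bound = max_decode_tokens_per_sec(70, bandwidth_tb_s)
        print(f"{name}: 70B model fits on one GPU: {fits}, "
              f"bandwidth-bound ceiling: {bound:.1f} tokens/s")
```

Under these simplified assumptions, a 70-billion-parameter model only fits on a single GPU in the H200 case, and the bandwidth ceiling scales directly with memory bandwidth. Real-world throughput depends on batching, quantization, and software, so treat the numbers as intuition rather than a benchmark.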

Accelerated AI Training and Inference with Tensor Core Architecture

The H200 also uses the Hopper generation's Tensor Cores, which are engineered to speed up AI training and inference tasks. NVIDIA claims that the new chip can nearly double inference speed on Llama 2 70B compared with its predecessor, the H100 GPU.

GH200 Grace Hopper Superchip: A Supercomputer Powerhouse

NVIDIA's GH200 Grace Hopper "superchip" is the other headline product. It pairs the H200 GPU with the Arm-based NVIDIA Grace CPU over the company's NVLink-C2C interconnect. Designed for supercomputers, the GH200 is aimed at scientists and researchers working on some of the world's most complex problems.

Transforming AI Hardware and Industry Leadership

NVIDIA's new AI supercomputer chips are a significant step forward in AI hardware. They are aimed at a wide range of deployments, including data centers, supercomputers, and cloud computing.

Moreover, these advancements are expected to solidify NVIDIA's position as the leading provider of AI hardware, further widening the gap between the company and its competitors.
