Nvidia’s Chat with RTX: A Personalized AI Chatbot

Hello Questers!

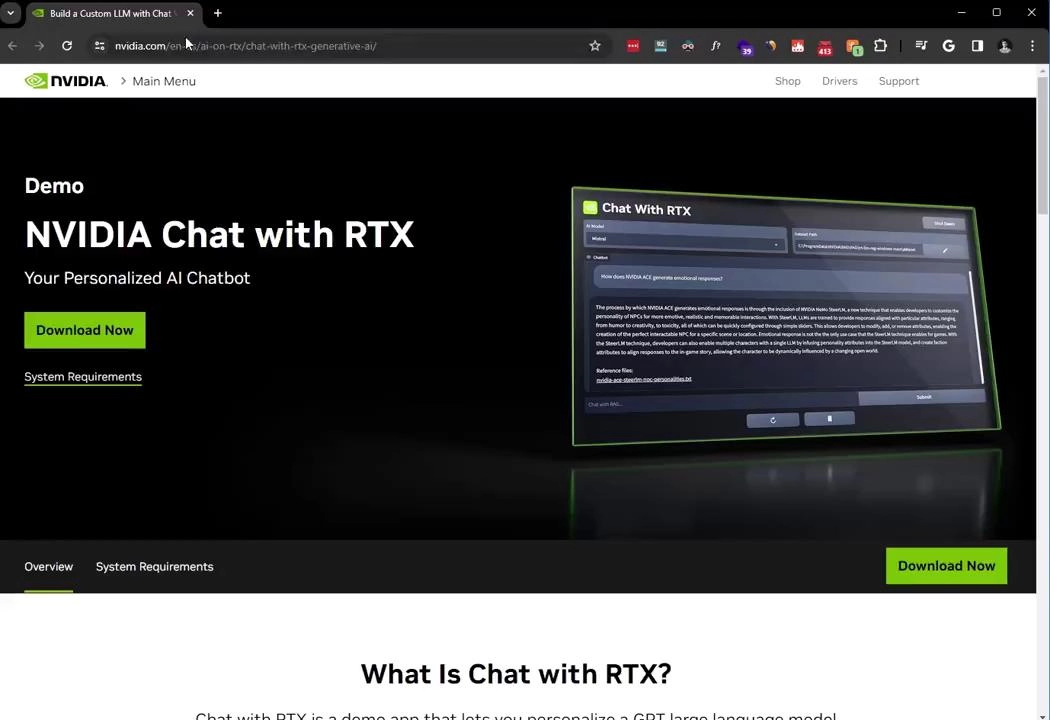

Nvidia has recently launched Chat with RTX, a demo app that lets you personalize a GPT large language model (LLM) connected to your own content.

What is Chat with RTX?

Chat with RTX lets you connect a GPT large language model (LLM) to your own content, such as docs, notes, videos, or other data. It uses retrieval-augmented generation (RAG), TensorRT-LLM, and RTX acceleration, so you can query a custom chatbot and quickly get contextually relevant answers.
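To make the idea of retrieval-augmented generation a bit more concrete, here is a minimal Python sketch. It is not how Chat with RTX is implemented: it assumes a toy bag-of-words similarity in place of a real embedding model, and the function names (`tokenize`, `retrieve`, `build_prompt`) and the sample notes are made up for illustration. It only shows the general pattern of finding the most relevant piece of your content and placing it in front of the question before the prompt goes to an LLM.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vec, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, documents: list[str], top_k: int = 1) -> str:
    """Put the retrieved context in front of the question (the 'augmented' step)."""
    context = "\n".join(retrieve(query, documents, top_k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical personal notes standing in for your own documents.
notes = [
    "The quarterly budget review is scheduled for Friday.",
    "My favorite pasta recipe uses garlic, olive oil, and chili flakes.",
    "The GPU driver update fixed the screen flickering issue.",
]

prompt = build_prompt("Which recipe uses garlic?", notes)
print(prompt)
```

In a real RAG setup, the word-count similarity would be replaced by vector embeddings, and the finished prompt would be passed to the language model to generate the answer.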

How Does It Work?

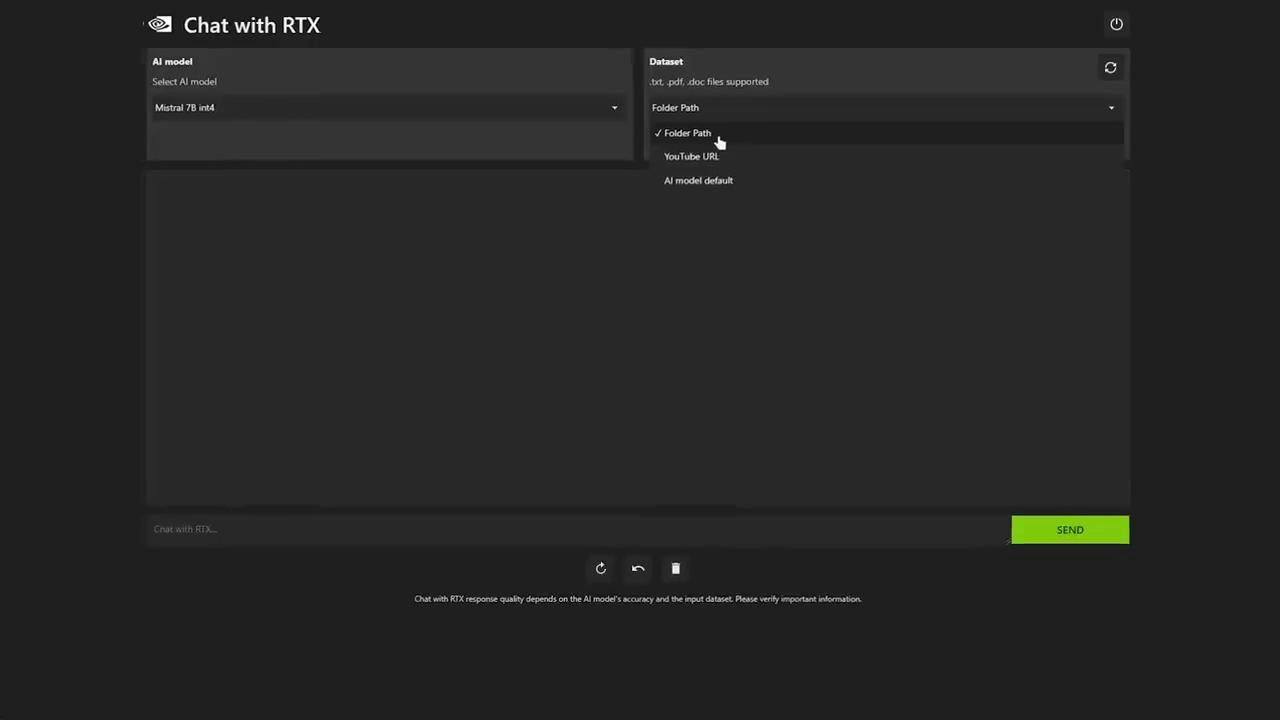

The tool supports various file formats, including .txt, .pdf, .doc/.docx and .xml. You can point the application at the folder containing these files, and the tool will load them into its library in just seconds. Users can also include information from YouTube videos and playlists. Adding a video URL to Chat with RTX allows users to integrate this knowledge into their chatbot for contextual queries.
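If you want to check which files in a folder match the formats listed above before pointing the app at it, a short Python sketch like the following could help. It is only an illustration: the function name `find_supported_files` is made up, and searching subfolders is an assumption here, since the post does not say whether Chat with RTX itself does that.

```python
from pathlib import Path

# File extensions named in this post as supported by Chat with RTX.
SUPPORTED_EXTENSIONS = {".txt", ".pdf", ".doc", ".docx", ".xml"}


def find_supported_files(folder: str) -> list[Path]:
    """Return all files under `folder` whose extension is in the supported set.

    Subfolders are searched too, which is an assumption made for this sketch.
    """
    root = Path(folder)
    return sorted(
        path
        for path in root.rglob("*")
        if path.is_file() and path.suffix.lower() in SUPPORTED_EXTENSIONS
    )
```

Calling `find_supported_files("C:/Users/you/Documents/notes")` (a hypothetical path) would give you a sorted list of matching files, so you can see what the chatbot would have to work with.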

Privacy and Security

Since Chat with RTX runs locally on Windows RTX PCs and workstations, results are fast and your data stays on the device. This means you can process sensitive data on a local PC without sharing it with a third party or needing an internet connection.

System Requirements

Chat with RTX requires a GeForce RTX 30 Series GPU or higher with at least 8 GB of VRAM, Windows 10 or 11, and the latest NVIDIA GPU drivers.

For Developers

The app is built from the TensorRT-LLM RAG developer reference project, available on GitHub. Developers can use that reference to develop and deploy their own RAG-based applications for RTX, accelerated by TensorRT-LLM.

Some Amazing Features

• Runs Locally on Your PC: Chat with RTX runs locally on Windows RTX PCs and workstations. This means the application is fast, and your data stays on your device.

• Get Answers from YouTube: You can include information from YouTube videos and playlists. Adding a video URL to Chat with RTX allows you to integrate this knowledge into your chatbot for contextual queries.

• Choosing an AI Model: Chat with RTX leverages retrieval-augmented generation (RAG), TensorRT-LLM, and RTX acceleration. This allows you to query a custom chatbot to quickly get contextually relevant answers.

• Choosing a Dataset: The tool supports various file formats, including .txt, .pdf, .doc/.docx and .xml. You can point the application at the folder containing these files, and the tool will load them into its library in just seconds.

How to Install

Visit https://www.nvidia.com/en-us/ai-on-rtx/chat-with-rtx-generat, download the package, and install it on your PC.

Read the system requirements before downloading.

Conclusion

Nvidia’s Chat with RTX represents a significant step forward in the field of AI, bringing the power of a personalized GPT chatbot to your local machine. It’s a promising tool for anyone looking to leverage the power of AI in their daily tasks.

For the most up-to-date information, please visit the official Nvidia website.

Thanks for reading & see you in the next amazing thread 🧵

Signing Off

Your QOOL Quester
