Unlocking the Future of Development with Gemini! ✨

Greetings of the day, Questers!

Developers now have cutting-edge models, intelligent tools that help them write code faster, and seamless integration across platforms and devices, all of which give them the power to build the future of AI. Since Gemini 1.0 launched last December, millions of developers have used Google AI Studio and Vertex AI to build with Gemini across 109 languages.

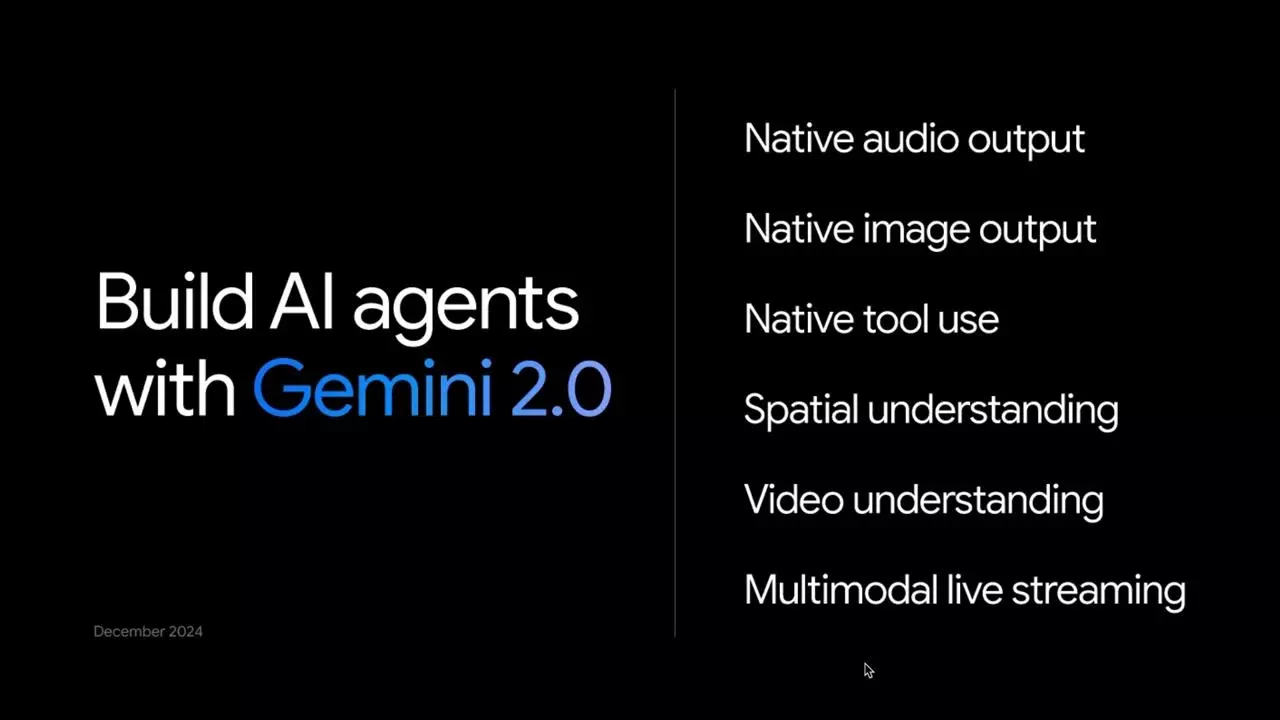

Today, Google is announcing Gemini 2.0 Flash Experimental, which enables even more immersive and interactive applications, along with new coding agents that enhance developer workflows by taking actions on the developer's behalf.

Build with Gemini 2.0 Flash

Building on the success of Gemini 1.5 Flash, Google is introducing 2.0 Flash, which is twice as fast as 1.5 Pro while delivering stronger performance, and which adds new multimodal outputs and native tool use. Google is also introducing a Multimodal Live API for building dynamic applications with real-time audio and video streaming.

Starting today, developers can test and explore Gemini 2.0 Flash through the Gemini API in Google AI Studio and Vertex AI during its experimental phase. General availability is planned for early next year.

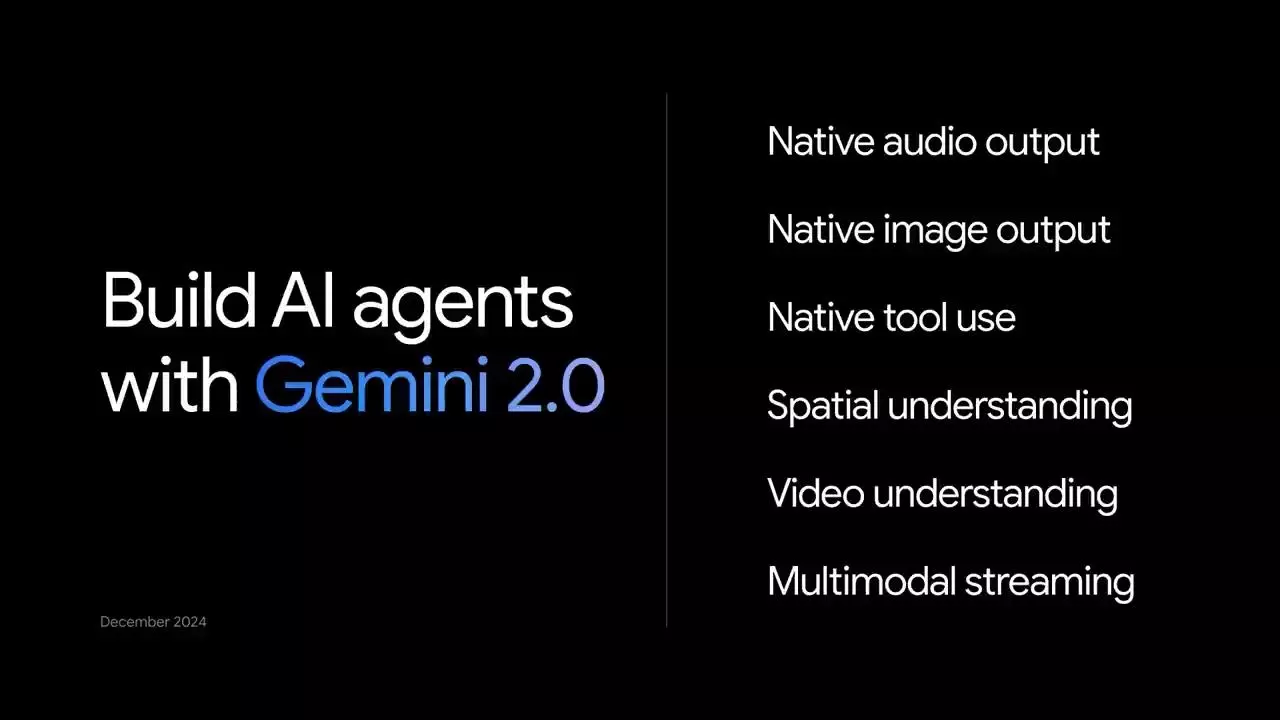

With Gemini 2.0 Flash, developers have access to:

1. Better performance

Gemini 2.0 Flash keeps the speed and efficiency developers expect from Flash while outperforming 1.5 Pro. It also improves on key benchmarks across multimodal, text, code, video, spatial understanding, and reasoning tasks. Better spatial understanding means more accurate bounding boxes for small objects in cluttered images, along with better object identification and captioning. To learn more, watch the spatial understanding video or read the Gemini API docs.
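When you ask the model for bounding boxes, you usually need to map them back onto the original image. The sketch below assumes the convention described in the Gemini API docs at the time of writing: each box is returned as [ymin, xmin, ymax, xmax] with coordinates normalized to a 0-1000 scale. Treat that format as an assumption and check the current docs; the function name `to_pixel_box` is hypothetical.

```python
from typing import List, Tuple

# Assumption: the model returns boxes as [ymin, xmin, ymax, xmax],
# with every coordinate normalized to a 0-1000 scale.
NORMALIZED_SCALE = 1000


def to_pixel_box(box: List[int], width: int, height: int) -> Tuple[int, int, int, int]:
    """Convert a normalized [ymin, xmin, ymax, xmax] box to pixel (x0, y0, x1, y1)."""
    ymin, xmin, ymax, xmax = box
    # Multiply before dividing so integer inputs stay exact where possible.
    x0 = round(xmin * width / NORMALIZED_SCALE)
    y0 = round(ymin * height / NORMALIZED_SCALE)
    x1 = round(xmax * width / NORMALIZED_SCALE)
    y1 = round(ymax * height / NORMALIZED_SCALE)
    return x0, y0, x1, y1


if __name__ == "__main__":
    # Hypothetical model output for a 1920x1080 image.
    print(to_pixel_box([100, 250, 500, 750], width=1920, height=1080))
```

Returning (x0, y0, x1, y1) matches the order most drawing libraries expect for a rectangle, so the result can be passed straight to an image-annotation step.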

2. New output modalities

Gemini 2.0 Flash lets developers generate integrated responses that combine text, audio, and images from a single API call. These new output modalities are available to early testers, with broader availability expected next year. All image and audio outputs will carry SynthID invisible watermarks, which help mitigate concerns about misinformation and misattribution.

Gemini 2.0 Flash includes native text-to-speech audio output, giving developers fine-grained control over not just what the model says but how it says it. It offers a choice of 8 high-quality voices in a range of languages and accents. See native audio output in action or dive into the developer docs.

Gemini 2.0 Flash now natively generates images and supports conversational, multi-turn editing, facilitating iterative refinement of outputs. It can produce interleaved text and images, making it suitable for multimodal content like recipes. Explore further in the native image output video.
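An interleaved response arrives as an ordered sequence of parts, some carrying text and some carrying image data, and the app has to keep that order when it renders the result (for example, a recipe step followed by its photo). The sketch below uses a hypothetical `Part` dataclass as a stand-in; the real Gemini SDK response objects have their own field names, so adapt the attribute access accordingly.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Part:
    """Hypothetical stand-in for one piece of a multimodal response."""
    text: Optional[str] = None
    image_bytes: Optional[bytes] = None
    mime_type: Optional[str] = None


def render_interleaved(parts: List[Part]) -> List[str]:
    """Walk the parts in order, keeping text and noting where each image belongs."""
    output: List[str] = []
    image_count = 0
    for part in parts:
        if part.text is not None:
            output.append(part.text)
        elif part.image_bytes is not None:
            image_count += 1
            # A real app might save the bytes to disk or embed them in HTML here.
            output.append(f"[image {image_count}: {part.mime_type}, {len(part.image_bytes)} bytes]")
    return output


if __name__ == "__main__":
    recipe = [
        Part(text="Step 1: Whisk the eggs."),
        Part(image_bytes=b"\x89PNG", mime_type="image/png"),
        Part(text="Step 2: Heat the pan."),
    ]
    for line in render_interleaved(recipe):
        print(line)
```

Processing parts strictly in order is the key design choice: it keeps each image next to the text it illustrates.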

3. Native tool use

Gemini 2.0 has been trained to use tools, a foundational capability for building agentic experiences. It can natively call tools such as Google Search and code execution, as well as custom third-party functions through function calling. Using Google Search natively as a tool produces more factual and comprehensive answers and also drives more traffic to publishers. The model can run multiple searches in parallel, retrieving more relevant facts from different sources at once and combining them for accuracy. To learn more, watch the native tool use video or start building from a notebook.
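With function calling, the model does not run your code itself: it returns the name of a function plus arguments, your app executes it, and you send the result back on the next turn. The sketch below shows only the app-side dispatch step under that assumption. `get_weather`, `TOOLS`, and `dispatch` are hypothetical names, and the dict shape of the call is illustrative rather than the SDK's exact type.

```python
import json
from typing import Any, Callable, Dict


def get_weather(city: str) -> Dict[str, Any]:
    """Hypothetical custom function; returns stubbed data instead of calling a real service."""
    return {"city": city, "forecast": "sunny", "temp_c": 24}


# Registry of functions the model is allowed to call, keyed by name.
TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch(function_call: Dict[str, Any]) -> Dict[str, Any]:
    """Run the function the model asked for and package the result for the next turn."""
    name = function_call["name"]
    args = function_call.get("args", {})
    if name not in TOOLS:
        # Report unknown functions back to the model instead of crashing.
        return {"name": name, "response": {"error": f"unknown function {name!r}"}}
    return {"name": name, "response": TOOLS[name](**args)}


if __name__ == "__main__":
    # Hypothetical function call, shaped like JSON a model might emit.
    call = json.loads('{"name": "get_weather", "args": {"city": "Bengaluru"}}')
    print(dispatch(call))
```

Keeping an explicit registry means the model can only trigger functions you have deliberately exposed.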

4. Multimodal Live API

Developers can now build real-time, multimodal applications that take audio and video streaming input from cameras or screens. The API supports natural conversation patterns such as interruptions and voice activity detection, and it can combine multiple tools in a single API call to handle complex use cases. To learn more, watch the multimodal live streaming video, try the web console, or use the starter code (Python).
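To give a feel for what "voice activity detection" and "interruptions" mean in a live audio app, here is a deliberately simple energy-threshold sketch. This is not how the Multimodal Live API implements these features (that happens on Google's side); it is a generic illustration. It assumes 16-bit PCM samples at 16 kHz, so 160 samples is a 10 ms frame, and the threshold and helper names are hypothetical values you would tune.

```python
import math
from typing import List, Sequence


def frame_rms(frame: Sequence[int]) -> float:
    """Root-mean-square energy of one frame of 16-bit PCM samples."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def detect_speech(samples: Sequence[int], frame_size: int = 160, threshold: float = 500.0) -> List[bool]:
    """Label each fixed-size frame as speech (True) or silence (False) by its energy."""
    return [
        frame_rms(samples[start:start + frame_size]) >= threshold
        for start in range(0, len(samples), frame_size)
    ]


def should_interrupt(model_is_speaking: bool, recent_flags: List[bool], min_speech_frames: int = 3) -> bool:
    """Barge-in rule: stop model playback once the user speaks for several consecutive frames."""
    if not model_is_speaking or len(recent_flags) < min_speech_frames:
        return False
    return all(recent_flags[-min_speech_frames:])


if __name__ == "__main__":
    silence = [0] * 320
    loud = [1000, -1000] * 160  # 320 samples of a loud square wave
    flags = detect_speech(silence + loud)
    print(flags)
    print(should_interrupt(True, flags))
```

Requiring several consecutive speech frames before interrupting avoids cutting the model off on a single cough or click.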

Google is thrilled to see the progress startups are already making with Gemini 2.0 Flash, prototyping new experiences such as tldraw's visual playground, Viggle's virtual character creation and audio narration, Toonsutra's contextual multilingual translation, and Rooms' addition of real-time audio.

To help you get started, Google has released three starter app experiences in Google AI Studio, along with open-source code for spatial understanding, video analysis, and Google Maps exploration, so you can begin building with Gemini 2.0 Flash right away.

The Next Level of AI Code Assistance with Gemini 2.0

Google is pushing the boundaries of AI code assistance, which has evolved from simple code search to powerful assistants integrated into your workflow. Now Google is unveiling a new advancement powered by Gemini 2.0: coding agents that handle tasks on your behalf.

Introducing Jules, Your AI Code Partner

Imagine finishing a bug-fix sprint only to face another long list. Now you can delegate Python and JavaScript coding tasks to Jules, an experimental code agent powered by Gemini 2.0. Jules works asynchronously within your GitHub workflow, handling bug fixes and other repetitive tasks while you focus on innovation.

Here's what Jules can do:

- Multi-step fixes: Creates comprehensive plans to address issues.

- Efficient modifications: Handles multiple file edits with ease.

- Pull request prep: Prepares pull requests to land fixes back in GitHub.

Early Access for Trusted Testers

Jules is currently available to a select group of trusted testers, and Google plans to open it up to more developers in early 2025. Sign up for updates at labs.google.com/jules.

Colab's Data Science Agent Gets Smarter

At I/O, Google introduced an experimental Data Science Agent on labs.google/code that lets users upload a dataset and get insights in a working Colab notebook within minutes. Google has been thrilled with the positive feedback and the impact it is having.

Now Colab is getting the same agentic capabilities, powered by Gemini 2.0. Simply describe your analysis goals and Colab automatically generates a notebook, accelerating your research and data analysis. Developers can join the trusted tester program for early access ahead of a wider rollout in the first half of 2025.

Empowering Developers to Build the Future

Gemini 2.0 empowers you to create amazing AI apps faster and more easily. Look for Gemini 2.0 integrations coming to Android Studio, Chrome DevTools, and Firebase in the coming months. You can also sign up to use Gemini 2.0 Flash in Gemini Code Assist for enhanced coding assistance in popular IDEs such as VS Code, IntelliJ, PyCharm, and more.

And that's it for this thread, guys!

I hope you found this thread useful and enjoyed it. 😊

Don't forget to drop a like and a comment. It really encourages me to make more informative threads like this one. 🤩

Please share your thoughts in the comment section below 👇🏻

#iqoocommunity #iqooconnect #iqooindia #iqoo

Follow me @DeepakBotu

Connect with me on Instagram @deepakbotu
